Agents, LLMs, vector stores, custom logic—visibility can’t stop at the model call.

Get the context you need to debug failures, optimize performance, and keep AI features reliable.

Tolerated by 4 million developers

LLM endpoints can fail silently because of downtime, transient API errors, or rate limits. Imagine your AI-powered search, chat, or summarization just stopping without explanation.

Sentry monitors for these failures and alerts you in real time. It points you to the line of code, the suspect commit, and the developer who owns it, so you can fix the problem before your users are impacted.
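As a rough illustration of how transient LLM failures can be surfaced instead of swallowed, here is a minimal sketch of a retry wrapper that reports every failed attempt. The names `TransientLLMError`, `call_with_reporting`, and the `report` callback are hypothetical and not part of the Sentry SDK; the sketch assumes your provider's rate-limit or timeout errors can be mapped to a single exception type.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientLLMError(Exception):
    """Hypothetical stand-in for a provider's rate-limit or timeout error."""


def call_with_reporting(
    call: Callable[[], T],
    report: Callable[[BaseException], object],
    retries: int = 2,
    backoff_s: float = 0.0,
) -> T:
    """Run `call`, reporting every transient failure and retrying it.

    `report` is any callable that accepts the exception, for example
    `sentry_sdk.capture_exception` once the SDK has been initialized.
    """
    if retries < 0:
        raise ValueError("retries must be >= 0")
    for attempt in range(retries + 1):
        try:
            return call()
        except TransientLLMError as exc:
            report(exc)  # make the failure visible even if a retry succeeds
            if attempt == retries:
                raise  # out of retries: let the caller see the error
            time.sleep(backoff_s * (2 ** attempt))  # exponential backoff
    raise AssertionError("unreachable")
```

In an application where `sentry_sdk.init(...)` has already run, passing `report=sentry_sdk.capture_exception` would send each failed attempt to Sentry, so a retry that eventually succeeds still leaves a record of the earlier errors.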

When something breaks in your AI agent, whether a tool fails silently, a model times out or responds slowly, or a call returns malformed output, traditional logs don’t show the full picture.

Sentry gives you complete visibility into the agent run: prompts, model calls, tool spans, and raw outputs, all linked to the user action that triggered them. You can see what failed, why it happened, and how it affected downstream behavior, making it easier to debug issues and design smarter fallbacks. Sentry makes it easy to spot slowdowns, debug performance issues, and keep your AI features fast and reliable for users.

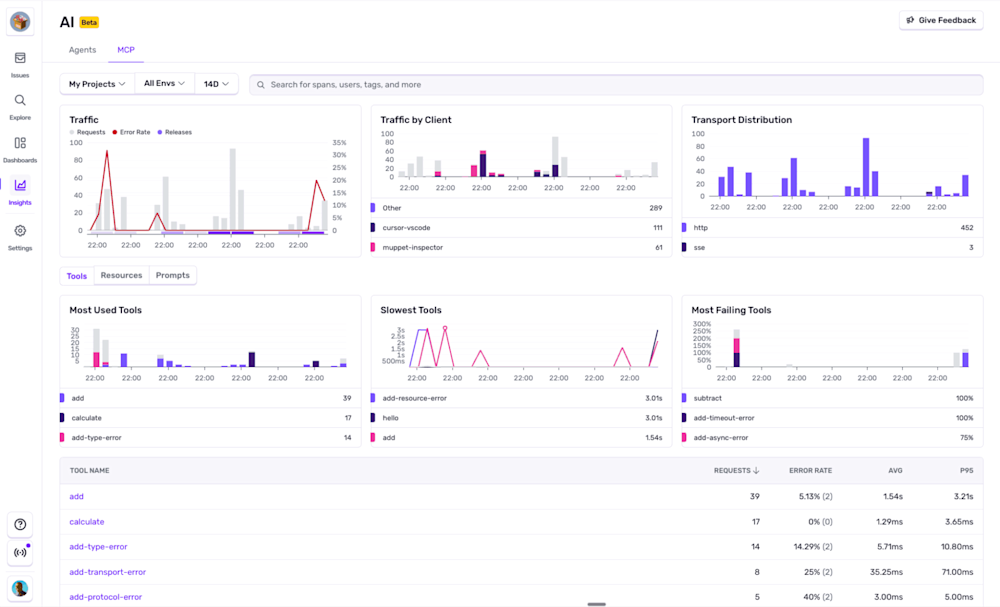

Your MCP server is where AI agents meet the real world. When something goes wrong, it’s not always obvious where to look. With Sentry’s MCP monitoring, you get protocol-aware visibility into every client, tool, and request hitting your server.

See which tools are failing, which transports are slowing down, and which clients are over-using your endpoints. Sentry surfaces the issues your logs miss, so you can fix problems before users, or their agents, notice. One line of code gives you end-to-end insight, no guesswork required.

We support every technology (except the ones we don't).

Get started with just a few lines of code.

Install sentry-sdk from PyPI:

    pip install "sentry-sdk"

Add OpenAIAgentsIntegration() to your integrations list:

    import sentry_sdk
    from sentry_sdk.integrations.openai_agents import OpenAIAgentsIntegration

    sentry_sdk.init(
        # Configure your DSN
        dsn="https://examplePublicKey@o0.ingest.sentry.io/0",
        # Add data like inputs and responses to/from LLMs and tools;
        # see https://docs.sentry.io/platforms/python/data-management/data-collected/ for more info
        send_default_pii=True,
        integrations=[
            OpenAIAgentsIntegration(),
        ],
    )

That's it. Check out our documentation to ensure you have the latest instructions.

increase in developer productivity

engineers rely on Sentry to ship code

faster incident resolution

Get started with the only application monitoring platform that empowers developers to fix application problems without compromising on velocity.